Why Math Doesn’t Help with Moral Dilemmas, Not Even the “Ethan Hunt Dilemma”

I like moral dilemmas because they are fun: they don’t admit clear solutions.

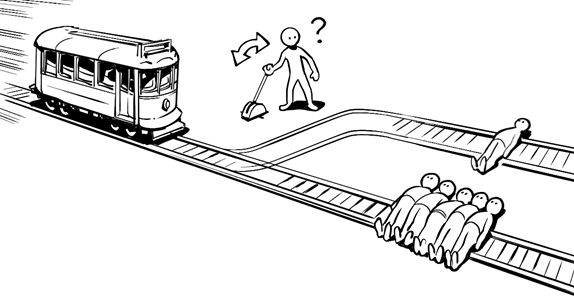

A simple example of a moral dilemma is the train dilemma (often called the trolley problem). Imagine a train approaching a fork in the track. On one branch there is one person, and on the other there are five people. You must decide which way the train goes.

If this is your first time hearing this dilemma, the natural response may be to sacrifice one person to save five.

But the fun of this dilemma is that it revolves around values, not quantities.

Let’s say that the five people are terminally ill, with medical diagnoses giving each of them at most a few more months to live, and that the other person is a healthy child. Is it harder to decide now?

The dilemma is interesting because it forces you to weigh the value on each side. If, instead of five people, there were 500 people with terminal illnesses, would that change anything? What if there were 5,000?

The central question of the dilemma is this: for whoever chooses the train’s direction, which track holds something of greater value to be preserved?

This kind of dilemma appears many times in the Doctor Who series, where the Doctor must decide “what cannot be decided”. One moment where the difficulty of such a decision, and his “role” as the one who makes it, becomes clear is the episode “The Girl Who Waited”. In it, the Doctor convinces a future version of his companion, who has endured immense suffering over many years, to help him save her younger self, who has not yet lived through what made the older version who she is.

To simplify: imagine you spent 10 years in a place where survival was very hard, and it turned you into a very different person from the one who went in. You don’t regret surviving there, because it made you who you are today. Then someone persuades you to help your 10-years-younger self avoid that place and everything you went through. This person promises that you and your younger self will both be able to exist in the world. So you accept; after all, you don’t want to cease to exist :3

However, after you trust this person, at the final hour they lock the door and leave you outside, condemned to die there. In the episode, the Doctor explains that if his companion entered the ship, the younger version would cease to exist, and he would, in effect, have made her suffer there for those 10 years. Rory (the companion’s husband, who travels with them) does not accept the decision and says he cannot deceive and betray the version of her who stayed there for 10 years. But the Doctor leaves the door in Rory’s hands and tells him it is his decision. Rory protests that this is not fair, because it would make him just like the Doctor… Anyway, the point is that the Doctor’s most intense adventures revolve around exactly this: dealing with moral dilemmas.

Finally, I will present a “moral dilemma” I invented, called the “Ethan Hunt Dilemma”.

In the movie Mission: Impossible 4, the protagonist, Ethan Hunt, at one point tries to prevent a weapon of mass destruction from being fired. In the scene, he drives a car through the streets of Europe at very high speed, and every second is crucial to saving the lives of the people in that region. Nothing suggests that the weapon will fail to launch if he does not get there in time to intercept it.

Basically, Ethan Hunt is trying to keep the 10 million people in that region from dying, a classic action-movie scene XD

But then, as he turns a corner, a street festival is taking place. His path is completely blocked by people dancing and partying in the middle of the street. The protagonist brakes and lets the weapon of mass destruction get away, and, for reasons that only happen in Hollywood movies, the weapon is not fired at that moment. This gives him “extra time” to stop it and carry the film to its much-acclaimed “happy ending”.

It may seem that this dilemma has a solution… because running over those 50 people to prevent the launch would save the millions of others who live in that region. And if nothing were done, those 50 would die anyway, along with the millions of others hit by the weapon.

If we think only about the “quantity of lives”, this problem does have a solution: run over whoever it takes to stop the weapon. But is the death and suffering you would cause, to the people run over and to those close to them, equivalent to saving them from this weapon? If the weapon caused instant, painless destruction, would it be coherent to inflict an agonizing death on 50 people to save 10 million, a group that includes those same 50?

Consider, for example, a line of 9 million people who would need to be run over to stop a weapon that would instantly kill 10 million people, these 9 million included. Does the decision change now?

Similarly, if saving the others required causing unimaginable suffering to one of these 10 million people, and doing nothing meant that this person would die along with all the others, would you do it?

And with this moral dilemma, we reach post number 150 on this blog XD

Cover image credit: Rosy – The world is worth thousands of pictures, from Pixabay